How to Scrape Google Maps: 4 Methods (Code & No-Code)

With over 200 million business listings on Google Maps globally, this platform is a goldmine of information for marketers, researchers, and developers.

Whether you want to gather leads, analyze competitors, or build location-based services, you may need to collect Google Maps data such as addresses, phone numbers, and ratings at scale. However, getting that data in bulk can be challenging due to anti-scraping measures and Google’s terms of service.

In this guide, we'll explore four methods to do it: writing a Python web scraping script with Selenium and residential proxies, using the official Google Maps API, leveraging unofficial third-party APIs, and trying no-code scraping tools.

What data can you scrape from Google Maps

Google Maps contains a wealth of public data about local companies that can be collected via web scraping. Typically, when you view a business listing on Google Maps, you can see details such as:

- Company name (the title of the place)

- Address (street address, city, postal code)

- Phone number

- Website URL

- Business category or type (e.g., restaurant, hardware store)

- Average rating (star rating) and number of reviews

- Opening hours (business hours for each day)

- Price level or menu (for restaurants, etc.)

- Photos and images of the location

- (Sometimes) Additional info like popular times, amenities, or a short description

All of the above Google Maps data is visible on the public listing page for a company. Using various methods, you can extract data for these fields across many businesses.
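If you plan to combine results from more than one method, it can help to normalize every listing into a single record type. The sketch below is one hypothetical way to do that in Python; the Listing class and its field names are illustrative choices that mirror the list above, not a format used by Google or by any particular tool.

```python
from dataclasses import asdict, dataclass, fields
from typing import List, Optional


@dataclass
class Listing:
    """One business listing; the fields mirror what a Google Maps place page shows."""
    name: str
    address: Optional[str] = None
    phone: Optional[str] = None
    website: Optional[str] = None
    category: Optional[str] = None
    rating: Optional[float] = None        # average star rating, e.g. 4.5
    review_count: Optional[int] = None
    opening_hours: Optional[str] = None   # e.g. "Mon: 9 AM-5 PM; Tue: Closed"
    price_level: Optional[str] = None

    @classmethod
    def columns(cls) -> List[str]:
        """Column order for a CSV export, taken from the field definitions."""
        return [f.name for f in fields(cls)]


# Any method's output can be mapped onto the same columns; missing fields stay None.
row = asdict(Listing(name="Example Cafe", rating=4.5, review_count=120))
```

Because every field except name defaults to None, the same record works whether a method returns the full set of details or only a few fields.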

For example, you might collect a list of all restaurants in a city with their address, phone, and rating. However, note that some information (like the text of individual reviews or owner-provided updates) may require extra steps to scrape and can be more challenging.

Also, Google Maps business pages typically don't contain personal information, since the platform focuses on companies and places.

Scraping Google Maps with Python & Selenium + proxies

One coding-based approach to extract data is to use Python with Selenium (a browser automation tool) to gather data from Google Maps directly. Essentially, this web scraping method automates a real web browser to navigate Google Maps, perform searches, and collect results. Here's how it works and some best practices:

How it works: You write a Python script that launches a headless browser (Chrome or Firefox) via Selenium. The script goes to maps.google.com, enters a search term (e.g., "coffee shops in New York"), then simulates scrolling or clicking through the resulting list of businesses.

As each listing's details load, the script parses the page’s elements (like the name, address, etc.) and saves them. Finally, you can save all the scraped data to a file (for example, a spreadsheet).

Basic steps with Selenium

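- Set up Selenium: Install the Selenium library. Selenium 4.6 and later include Selenium Manager, which downloads a matching WebDriver (like ChromeDriver) automatically; with older versions, download the driver yourself. Write a script to launch a browser instance, using headless mode to run without a visible window.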

- Load Google Maps: Navigate to the Google Maps website. You may need to handle an initial consent pop-up (especially in EU regions) by clicking "Accept" or "Reject all".

- Search for a query: Find the search box element, type your target query (e.g., "restaurants in London"), and press Enter.

- Scroll through results: The results panel on the left loads more listings as you scroll. Use Selenium to scroll the results container (or click a "More results" button, if present) until no new listings appear. Google Maps caps the number of results per search, so you'll typically see at most around 120 listings for one query.

- Extract details: For each listing element, extract the data (text) such as name, address, etc. You might need to click each listing to get additional details in the side panel. Alternatively, some info (like address and rating) is visible in the list view itself.

- Output the data: Store the collected data in a structured format. For instance, append each company entry to a list and then write the list to a CSV file for later analysis.
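The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not a production scraper: it assumes Selenium 4.6+ with Chrome installed, opens the https://www.google.com/maps/search/ URL with the query appended instead of typing into the search box (which avoids depending on the search box's markup), and relies on selectors that are assumptions about Google Maps' current page structure: div[role='feed'] for the results panel, links containing /maps/place/ for listings, and a "Reject all" consent button. Expect to adjust them.

```python
import csv
import time
from typing import Dict, List
from urllib.parse import quote_plus


def build_search_url(query: str) -> str:
    """Google Maps opens a results list for URLs of the form /maps/search/<query>."""
    return "https://www.google.com/maps/search/" + quote_plus(query)


def scrape_maps(query: str, max_results: int = 60, headless: bool = True) -> List[Dict[str, str]]:
    # Imported here so the helper functions work even without Selenium installed.
    from selenium import webdriver
    from selenium.common.exceptions import TimeoutException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    if headless:
        options.add_argument("--headless=new")
    driver = webdriver.Chrome(options=options)  # Selenium 4.6+ locates ChromeDriver itself
    try:
        driver.get(build_search_url(query))

        # ASSUMPTION: EU visitors see a consent page with a "Reject all" button.
        try:
            WebDriverWait(driver, 5).until(EC.element_to_be_clickable(
                (By.XPATH, "//button[contains(., 'Reject all')]"))).click()
        except TimeoutException:
            pass  # no consent page was shown

        # ASSUMPTION: the scrollable results panel is marked role="feed".
        feed = WebDriverWait(driver, 15).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, "div[role='feed']")))
        seen = -1
        while True:
            links = feed.find_elements(By.CSS_SELECTOR, "a[href*='/maps/place/']")
            if len(links) >= max_results or len(links) == seen:
                break  # enough results, or scrolling stopped loading new ones
            seen = len(links)
            driver.execute_script("arguments[0].scrollTop = arguments[0].scrollHeight;", feed)
            time.sleep(2)  # pause so the next batch can load

        # ASSUMPTION: each result link's aria-label holds the business name.
        return [{"name": link.get_attribute("aria-label") or "",
                 "url": link.get_attribute("href") or ""}
                for link in links[:max_results]]
    finally:
        driver.quit()


def save_csv(rows: List[Dict[str, str]], path: str) -> None:
    """Write rows to CSV, using the first row's keys as the header."""
    if not rows:
        return
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
```

For example, rows = scrape_maps("coffee shops in New York", max_results=40) followed by save_csv(rows, "coffee_shops.csv"). This sketch reads only the name and listing URL from the list view; addresses, phone numbers, and ratings would require opening each listing (or parsing each result card's text) with the same kind of explicit waits.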

Tips to avoid blocking and errors

Scraping Google Maps is not trivial because Google has strong anti-scraping measures. Here are some best practices to improve your success:

- Add wait times and retries: Don't scrape too fast. Use short time.sleep() delays or Selenium explicit waits between actions. Let the page load fully before trying to get elements. If something fails to load or you get an error, implement a retry mechanism with back-off timing.

- Rotate proxies and user-agents: Google may block your IP if you send too many requests quickly or from one address. Using a pool of proxies (different IP addresses) helps distribute requests, especially for large-scale data extraction. Also rotate the User-Agent header (pretending to be different browsers/devices) so your scraper doesn’t always appear as the same script.

- Use a headless browser stealthily: Running in headless mode is convenient, but some websites can detect headless Chrome. Consider using tools or settings that reduce detectability (for example, using an undetectable ChromeDriver or adding proper browser flags).

- Avoid login or private data: Stick to scraping publicly available company info only. Do not attempt to scrape content that requires logging into a Google account or any private user data. Not only is that against Google’s policies, it’s unnecessary for company listings (which are public).

- Handle errors and CAPTCHAs: If Google presents a CAPTCHA or "Are you human?" prompt, your scraper should pause or stop. You might solve it manually or implement a CAPTCHA-solving service, but hitting these challenges is a sign you're scraping too aggressively. Back off the request rate, use more proxies, and try again.
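A few of these tips translate directly into code. The sketch below shows retries with exponential back-off plus random jitter, and round-robin proxy rotation with a random User-Agent passed to Chrome as command-line flags. The proxy addresses and User-Agent strings are placeholders to replace with your own, and retrying on any Exception is a simplification; in practice you would catch the specific Selenium errors you expect.

```python
import itertools
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")

# PLACEHOLDERS: substitute your own proxy endpoints and current browser User-Agent strings.
PROXIES = ["http://proxy1.example:8000", "http://proxy2.example:8000"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]
_proxy_cycle = itertools.cycle(PROXIES)  # round-robin over the proxy pool


def backoff_delays(retries: int, base: float = 2.0, cap: float = 60.0) -> List[float]:
    """Exponential back-off: base, 2*base, 4*base, ... never longer than cap seconds."""
    return [min(cap, base * 2 ** i) for i in range(retries)]


def with_retries(action: Callable[[], T], retries: int = 4, base: float = 2.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run action(); after each failure, wait (back-off plus jitter) and try again."""
    delays = backoff_delays(retries, base)
    for attempt in range(retries + 1):
        try:
            return action()
        except Exception:  # simplification: catch the specific errors you expect
            if attempt == retries:
                raise  # out of retries: surface the last error
            sleep(delays[attempt] + random.uniform(0, 1))  # jitter spreads retries out
    raise AssertionError("unreachable")  # the loop always returns or raises


def next_chrome_args() -> List[str]:
    """Chrome flags for the next proxy in the pool and a randomly chosen User-Agent."""
    return [
        f"--proxy-server={next(_proxy_cycle)}",
        f"--user-agent={random.choice(USER_AGENTS)}",
        "--headless=new",
    ]
```

To use these, pass each flag from next_chrome_args() to webdriver.ChromeOptions().add_argument() before starting a browser, and wrap a flaky step in with_retries, e.g. with_retries(lambda: driver.get(url)). Chrome's --proxy-server flag does not accept a username and password, so authenticated proxies typically need IP allow-listing on the provider's side or an extra tool.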

Using Python and Selenium gives you full control and flexibility. You can scrape any piece of information that’s visible on the site. This approach is great for one-off projects or custom data gathering.

However, it does require coding skills and ongoing maintenance (Google’s site structure can change, which might break even the best scraper). It’s also relatively slow compared to using an API, since a real browser has to load each page.

For many use cases, especially if you need hundreds or thousands of records, you'll want to use proxies and careful tactics to avoid blocks.

With patience and tuning, though, this DIY method can collect a substantial amount of Google Maps information without using the official API.

Using the official Google Maps API

Google provides an official interface for places and location data called the Google Places API (part of the Google Maps Platform). This API allows developers to retrieve structured data about places (companies, landmarks, etc.) via HTTP requests, without having to scrape Google Maps yourself.

It’s Google’s sanctioned way to collect company information and is fully compliant with Google’s terms (though it isn’t free beyond certain limits).

Setup requirements: To use the official API, you need a Google Cloud account:

- Create a Google Cloud project and enable the Places API (or Places API Web Service) on it.

- Set up billing for your project. Google requires a valid payment method even though it includes a free monthly usage allowance, and every Places API request must carry an API key or OAuth token.

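- Obtain an API key and restrict it (for example, to the Places API and to your server’s IP addresses or your app’s domain). For security, keep the key out of your source code, for example by loading it from an environment variable.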

Once you have an API key, you can call various endpoints of the Places API to get data:

- Place Search: Use the Text Search or Nearby Search endpoints to find places by a query or geographic area. For example, you can search for "pizza in Chicago" or get all companies of a certain type within a 5 km radius. The response returns place IDs and basic info (name, address, coordinates, etc.) for up to 20 results per page; for Text Search, a page token lets you fetch further pages, up to 60 results in total.

- Place Details: Given a specific place ID (for example, obtained from a search), you can request detailed information about that place. This includes address, phone number, website, opening hours, average rating, total number of reviews, and more. (The Places API will return up to 5 sample reviews per place in the details response.)

- Place Photos: If you need photos, the API lets you fetch a photo by passing the photo reference returned in a Place Details response.

- Geocoding (and other APIs): Depending on your project, you might also use the Geocoding API (to convert addresses to coordinates or vice versa) or other Google Maps Platform services. These are separate from the Places API but can complement your data collection.
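Here is a minimal Text Search sketch using only Python's standard library. It assumes the newer Places API (New) endpoint, places.googleapis.com/v1/places:searchText, which selects response fields with an X-Goog-FieldMask header and pages through results with nextPageToken; the legacy Places API Web Service uses different URLs and parameter names. Verify the field names against Google's current documentation before relying on them.

```python
import json
import time
import urllib.request
from typing import Any, Dict, List, Optional

TEXT_SEARCH_URL = "https://places.googleapis.com/v1/places:searchText"
# The field mask selects which fields come back (and which pricing tier applies).
# nextPageToken is listed so the response includes it for pagination.
SEARCH_FIELD_MASK = ",".join([
    "places.id", "places.displayName", "places.formattedAddress",
    "places.rating", "nextPageToken",
])


def build_text_search_request(query: str, api_key: str,
                              page_token: Optional[str] = None) -> urllib.request.Request:
    """Build the POST request for one page of Text Search results."""
    body: Dict[str, Any] = {"textQuery": query}
    if page_token:
        body["pageToken"] = page_token  # ask for the next page of the same search
    return urllib.request.Request(
        TEXT_SEARCH_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Goog-Api-Key": api_key,
            "X-Goog-FieldMask": SEARCH_FIELD_MASK,
        },
        method="POST",
    )


def text_search_all(query: str, api_key: str, max_pages: int = 3) -> List[Dict[str, Any]]:
    """Collect up to max_pages pages of results (20 per page, at most 60 in total)."""
    places: List[Dict[str, Any]] = []
    token: Optional[str] = None
    for _ in range(max_pages):
        request = build_text_search_request(query, api_key, token)
        with urllib.request.urlopen(request, timeout=30) as resp:
            data = json.load(resp)
        places.extend(data.get("places", []))
        token = data.get("nextPageToken")
        if not token:
            break  # no more pages
        time.sleep(1)  # brief pause between page requests
    return places
```

For example, after import os, places = text_search_all("pizza in Chicago", os.environ["GOOGLE_MAPS_API_KEY"]), where GOOGLE_MAPS_API_KEY is a hypothetical environment variable holding your key. Each returned place carries an id you can pass to a Place Details request.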

Using the official API is straightforward for developers: you make an HTTPS request (for example, using Python’s requests library or Google’s client libraries) and get JSON data in return. There’s no need to parse HTML or deal with page loads, and you won’t run into front-end anti-scraping measures.
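To show that request/response flow concretely, here is a Place Details call with Python's standard library. It assumes the Places API (New) endpoint, places.googleapis.com/v1/places/ followed by the place ID, and its field names (displayName, nationalPhoneNumber, websiteUri, userRatingCount, regularOpeningHours); the legacy API names these fields differently. The to_row helper is a hypothetical convenience that flattens the JSON into one spreadsheet row.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

DETAILS_FIELD_MASK = ",".join([
    "id", "displayName", "formattedAddress", "nationalPhoneNumber",
    "websiteUri", "rating", "userRatingCount", "regularOpeningHours",
])


def get_place_details(place_id: str, api_key: str) -> Dict[str, Any]:
    """Fetch one place's details as parsed JSON."""
    url = "https://places.googleapis.com/v1/places/" + urllib.parse.quote(place_id)
    req = urllib.request.Request(
        url, headers={"X-Goog-Api-Key": api_key, "X-Goog-FieldMask": DETAILS_FIELD_MASK}
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def to_row(place: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten a details response into one spreadsheet row; absent fields become None."""
    hours = place.get("regularOpeningHours", {}).get("weekdayDescriptions", [])
    return {
        "place_id": place.get("id"),
        "name": place.get("displayName", {}).get("text"),
        "address": place.get("formattedAddress"),
        "phone": place.get("nationalPhoneNumber"),
        "website": place.get("websiteUri"),
        "rating": place.get("rating"),
        "review_count": place.get("userRatingCount"),
        "hours": "; ".join(hours) or None,  # one cell with all weekday descriptions
    }
```

For a place ID obtained from a search, to_row(get_place_details(place_id, api_key)) yields a dict ready for csv.DictWriter.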

Pros of the official API

- Reliable and legal: This method is sanctioned by Google. As long as you abide by their usage limits and terms, you won't get blocked. The data comes directly from Google’s servers in a structured format.

- No maintenance of scrapers: You don’t have to worry about site layout changes breaking your code. Google maintains the API and its data format.

- Comprehensive coverage: Google’s database is huge. You have access to information on millions of places worldwide, the same data that appears on Google Maps.

Cons and limitations

- Cost: The API isn’t completely free. Google’s free tier (historically a $200 monthly credit, more recently a free monthly call allowance per product) covers on the order of 10,000 calls per month. Beyond that, you pay per request (e.g., roughly $17 per 1,000 Place Details calls, though pricing varies with the data fields you request and changes over time, so check Google’s current pricing page). For small projects this is fine, but for very large data pulls the cost can add up quickly.

- Data limits: The official API doesn’t expose every piece of information that the Google Maps website shows. For instance, it returns only a handful of reviews (up to 5 per place, not all reviews), it doesn’t provide business email addresses, and Text Search returns at most 60 results per query (three pages of 20).

- Usage terms: When using Google’s API, you agree to its terms of service, which restrict how you display and store the data. For example, Places data shown in your app generally must carry Google attribution and may not be displayed on a non-Google map. If you’re just storing data for analysis, make sure you comply with Google’s policies on data usage and retention.
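Because the API bills per request, it’s worth estimating a job before running it. The helper below only does the arithmetic: the price per 1,000 calls and the free allowance are inputs you should take from Google’s current pricing page, and the figures in the usage example are illustrative, reusing the roughly $17 per 1,000 Place Details rate mentioned in the cost bullet. estimate_job assumes one Text Search page per 20 places plus one Place Details call per place.

```python
import math


def estimate_cost(calls: int, price_per_1000: float, free_calls: int = 0) -> float:
    """Estimated cost in USD for `calls` requests after a free monthly allowance."""
    billable = max(0, calls - free_calls)  # only calls beyond the free tier are billed
    return billable / 1000 * price_per_1000


def estimate_job(places: int, search_price: float, details_price: float,
                 free_calls_per_product: int = 0) -> float:
    """Search pages (20 places each) plus one Place Details call per place."""
    search_calls = math.ceil(places / 20)
    return (estimate_cost(search_calls, search_price, free_calls_per_product)
            + estimate_cost(places, details_price, free_calls_per_product))
```

For example, estimate_cost(50_000, 17.0, free_calls=10_000) returns 680.0: about $680 for 50,000 Place Details calls once a 10,000-call allowance is used up.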

Despite these limitations, Google’s official API (the Places API) is the safest and most stable way to get Google Maps data. It’s best suited for applications where you need to integrate data on the fly (like showing nearby locations in your app) or for moderate-scale data gathering within Google’s guidelines.

If your data needs are very large or you need info that the API doesn’t provide (like all reviews), you might consider the other methods below.

Using unofficial APIs

What if you want the ease of an API but don’t want to go through Google’s setup or face the API’s data restrictions? There are third-party services that provide their own Google Maps scraper APIs. These services essentially do the heavy lifting of scraping Google Maps for you and offer results via an API or downloadable file.

For example, Apify offers a Google Maps Scraper actor that you can run by providing parameters (like a search query or geographic area), and it will return all the results it can find in that region, complete with details.

Another service, Outscraper, provides an API specifically designed for Google Maps data extraction. You send it a search query or a category and location, and it returns the structured data (JSON, CSV, etc.).

Services like these handle web scraping behind the scenes using their own proxy networks and headless browsers. They can often gather more data than the official API allows.

For instance, a well-built unofficial scraper can retrieve hundreds of results by automatically paging through, and even fetch details like all user reviews or photos if needed, bypassing Google’s normal UI limits.

How it works

Using an unofficial API is usually as simple as making an HTTP request to their endpoint with your query and an API key from the provider. For example, you might call a URL with something like:

?query=pizza+in+Chicago&apikey=YOURKEY

The third-party service will then perform the search on Google Maps, scrape the results, and return data to you in JSON or CSV format. You don’t see the scraping process. It’s all on their end.
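In Python, such a call might look like the sketch below. The endpoint is a hypothetical placeholder (api.example.com), and the query and apikey parameter names simply mirror the example URL above; each real provider documents its own base URL, parameters, and response format.

```python
import json
import urllib.parse
import urllib.request
from typing import Any

# HYPOTHETICAL placeholder endpoint: each provider has its own URL, parameters, and response shape.
PROVIDER_ENDPOINT = "https://api.example.com/google-maps/search"


def build_provider_url(query: str, api_key: str) -> str:
    """Encode the parameters like the example above: spaces become '+'."""
    return PROVIDER_ENDPOINT + "?" + urllib.parse.urlencode({"query": query, "apikey": api_key})


def fetch_places(query: str, api_key: str, timeout: float = 120.0) -> Any:
    """Call the provider and return its parsed JSON response."""
    # The provider scrapes Google Maps while you wait, so allow a generous timeout.
    with urllib.request.urlopen(build_provider_url(query, api_key), timeout=timeout) as resp:
        return json.load(resp)
```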

Pros

- No infrastructure for you: You don’t have to manage browsers, proxies, or CAPTCHAs. The service handles all those anti-scraping hurdles.

- High volume ready: These providers are designed for large-scale data extraction without getting blocked. If you need tens of thousands of records, they can often deliver (though you’ll pay accordingly).

- Rich data output: Unofficial scrapers often extract data beyond what the official API gives. For example, some will crawl each company’s website to find emails or social media links, or they’ll fetch all the Google Maps reviews for a place, etc., giving you a more complete dataset.

Cons

- Cost: Third-party APIs are usually paid services. Depending on the provider, you might be charged per request or per data unit (e.g., per place or per 1000 places). Sometimes these can be cheaper than Google’s pricing for large jobs, but not always: you have to compare. There might be free tiers or trial credits, but large jobs will cost money.

- Dependency on a third party: When you rely on an unofficial API, you are trusting another company to do the scraping correctly. If Google’s site changes or if the service has downtime, your project is affected. There’s also some vendor lock-in to consider (if you build your system around their API, switching later might require some work).

- Terms of service risk: These services scrape Google on your behalf, which is against Google’s terms of service. Much of the direct legal risk falls on the provider rather than on you, but it’s still technically a gray area, so use the data responsibly. Also, the extracted data is still subject to Google’s copyright and fair use considerations, even if you didn't scrape it yourself.

In summary, unofficial scraping APIs like Apify, Outscraper, ScrapingBee, SerpApi, etc., are great when you need a lot of Google Maps data quickly and you’re willing to pay for convenience. They’re popular for lead generation (e.g., getting a list of all plumbers in a state with contact info) and other bulk data needs.

Just be mindful of the cost and ensure the service is reputable, since you’ll be relying on their data accuracy and ethics.

No-Code data extraction with 3rd party tools

If you’re not a programmer or you just want a quick way to get a list of places without writing code, no-code tools can help. There are several third-party solutions (some online, some as browser extensions or desktop software) that allow you to scrape Google Maps with a visual interface and zero coding.

For example, GMapsExtractor is a Chrome extension marketed as a no-code Google Maps scraper. With a tool like this, you typically go to Google Maps in your browser, search for your term (e.g., "libraries in Toronto"), and then click the extension’s button to start scraping.

The tool will automatically scroll through the results and gather the details, performing the web scraping for you in the background. Once it’s done, you can download the collected data as a CSV or Excel file; GMapsExtractor reportedly makes this a one-click process and can also export to JSON.

Pros:

- Ease of use: As the name suggests, you don’t need to write code or be technically inclined. The tools are designed for anyone to use.

- Great for small jobs: If you just need to grab a few dozen or a few hundred listings for a one-time project, a no-code tool can often do it in minutes. This is perfect for scenarios like compiling a local leads list or doing market research in a pinch.

- Visual process: You often get to see the scraping as it happens (in the case of an extension, it might scroll Google Maps in your browser). This can be satisfying and lets you verify you’re getting the data you want. The output is usually ready to use, with options to save to CSV or Excel directly.

Cons:

- Limited scalability: No-code tools running in your browser are constrained by your machine and IP. Scraping thousands of entries this way can be slow or lead to your IP being temporarily blocked by Google. Cloud-based no-code services can handle a larger scale, but they often come with subscription costs and still have limits.

- Less flexibility: Each tool extracts a preset list of data fields. If the tool doesn’t capture, say, a company’s latitude/longitude or some niche field, you might not have the ability to add that. With your own code, you could extract anything you find on the page.

- Reliability: Free or cheap scraping tools can break when Google changes its site layout. You then have to wait for the tool developer to update it. Also, be cautious about security: use well-known tools, since a browser extension that can scrape data could potentially also see your other web activity.

- Data accuracy: Because you’re relying on a pre-built method, always double-check a portion of the results. There could be occasional errors or missed entries if the tool didn’t scroll fully or encountered a pop-up.
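For that double-check, a short script can flag obvious problems in an exported file before you rely on it. This sketch assumes the export has name, address, and phone columns (column names differ between tools, so adjust them) and reports the row count, rows that share the same name and address, and how many rows are blank in each required column.

```python
import csv
from collections import Counter
from typing import Any, Dict, Iterable, Sequence


def audit_export(lines: Iterable[str], key_fields: Sequence[str] = ("name", "address"),
                 required: Sequence[str] = ("name", "address", "phone")) -> Dict[str, Any]:
    """Summarize a CSV export: row count, likely duplicates, and blanks per required column."""
    rows = list(csv.DictReader(lines))
    # Two rows with the same name and address are probably one listing scraped twice.
    keys = Counter(tuple((r.get(f) or "").strip() for f in key_fields) for r in rows)
    duplicates = {k: n for k, n in keys.items() if n > 1 and any(k)}
    missing = {f: sum(1 for r in rows if not (r.get(f) or "").strip()) for f in required}
    return {"rows": len(rows), "duplicates": duplicates, "missing": missing}
```

Any file object works as input, e.g. with open("export.csv", newline="", encoding="utf-8") as f: print(audit_export(f)). A large count of blank phone numbers or many duplicates is a hint that the tool didn’t scroll fully or was interrupted.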

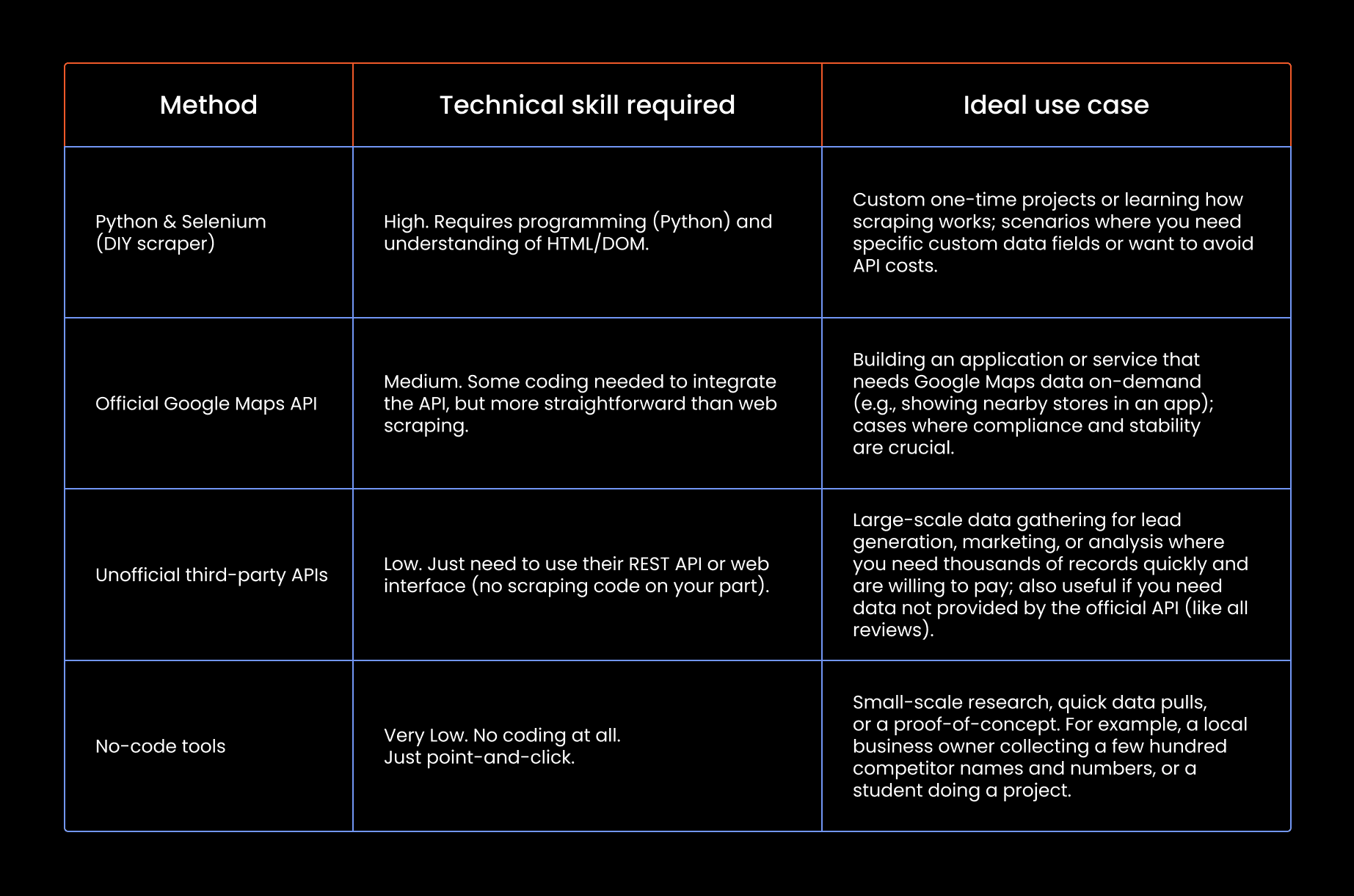

When to use each method

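We’ve covered four different methods to get Google Maps information. Which one should you choose? It depends on your project’s requirements, your technical skills, and the volume of data you need. Here’s a quick comparison to help you decide:

- Python + Selenium + proxies: full control over which fields you collect, but it requires coding skills, ongoing maintenance, and careful tactics to avoid blocks, and it is slower than API-based options. Best for custom or one-off projects.

- Official Google Places API: reliable, structured, and compliant with Google’s terms, but paid beyond the free allowance, capped at 60 results per Text Search query, limited to a few reviews per place, and without email addresses. Best for app integrations and moderate-scale data gathering.

- Unofficial third-party APIs: no infrastructure to manage, built for high volume, and often richer data (all reviews, or emails found on company websites), but paid, dependent on a third party, and in a terms-of-service gray area. Best for large lead-generation jobs.

- No-code tools: the easiest option and well suited to a few dozen or a few hundred listings, but limited in scale, flexibility, and reliability. Best for non-programmers and quick, small jobs.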

Final thoughts

Whichever method you choose, use the data responsibly and ethically. Remember that while company information is public, Google’s platform and terms still apply if you’re scraping their service. If you’re looking for more tips or just want to share your experiences, make sure to visit our Discord community!

What is the best tool to scrape data from Google Maps?

The best tool depends on your needs. Use no-code tools for quick tasks, APIs for reliability, or custom scrapers for flexibility and control.

Can I scrape business emails from Google Maps?

Google Maps doesn't list emails directly. You can often find them by visiting the company’s linked website.
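As a rough sketch of that approach, the code below downloads one page of a company’s website and pulls out email-like strings with a regular expression. The pattern is a pragmatic approximation rather than a full address validator, it skips image filenames such as logo@2x.png that happen to match, and many sites list emails only on a contact page, so you may need to fetch more than one URL.

```python
import re
import urllib.request
from typing import List

# Pragmatic pattern for finding addresses in page text; not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IMAGE_SUFFIXES = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp")


def extract_emails(html: str) -> List[str]:
    """Unique email-like strings in order of first appearance, lower-cased."""
    found: List[str] = []
    for match in EMAIL_RE.findall(html):
        email = match.lower()
        # Skip retina image names such as "logo@2x.png", which also match the pattern.
        if email.endswith(IMAGE_SUFFIXES) or email in found:
            continue
        found.append(email)
    return found


def emails_from_website(url: str, timeout: float = 15.0) -> List[str]:
    """Download one page (e.g., the website URL from a listing) and extract emails."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    return extract_emails(html)
```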

Can I scrape company reviews from Google Maps?

Yes, but only a few via the official API. To get all reviews, you’ll need to scrape the website using code or a third-party service.

Is it legal to scrape Google Maps?

Scraping public data is generally legal, but it may violate Google’s terms of service. Use caution and scrape responsibly.